dnsmasq Everywhere

August 09, 2018

dnsmasq is one of those pieces of software that you might not be familiar with. Personally, on all new deployments for employers or customers, I advocate for dnsmasqing all the things.

dnsmasq, at a high level, is a DNS resolver. However, it’s decidedly not BIND. Think of it more as a smart DNS router and caching layer.

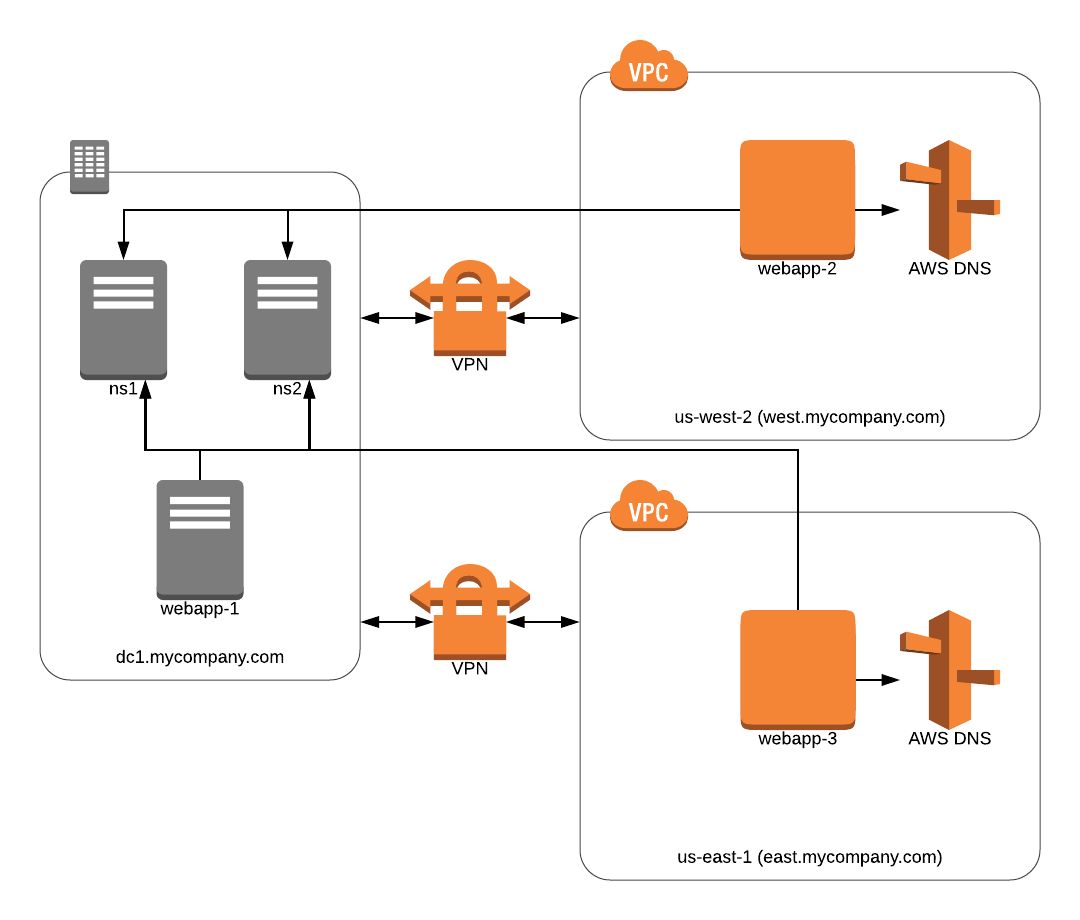

Consider the following network layout:

This is based on a real environment that I worked with; the names have been changed to protect the guilty.

In a nutshell, there was a VPC in Amazon in us-east-1, a VPC in Amazon in us-west-2, and a datacenter somewhere in between. The VPCs in Amazon could not talk to each other, but could each communicate with the datacenter. The web applications in the diagram all ran the same codebase with the same configuration, using a MySQL cluster located in the datacenter. Yeah, it’s quite inefficient to query a MySQL cluster across the continental US, but this isn’t a contrived example: this was something we had to support.

In order for the VPCs to resolve the IP addresses of the MySQL cluster, we injected the IP addresses for ns1 and ns2 into Amazon’s DHCP option set, yielding three resolvers: our two resolvers and the Amazon provided DNS resolver.

After vigorous testing, we brought us-east-1 online to begin serving traffic, using a CDN to geographically route to the nearest set of servers. Almost immediately, our average request time skyrocketed. Our first suspicion was MySQL, but after a lot of digging, it turned out to be DNS.

If you’ve been in the industry long enough, this haiku will strike home and strike hard.

What happened? Well, it turned out that all DNS queries were crossing the US. Where can I find Google.com? Here, lemme ask the west coast about that. Where can I find east.mycompany.com? Here, lemme ask the west coast about that. Worse, when resolving local DNS entries, a query would cross the US twice, trying each of our two datacenter resolvers in turn, before it failed over to the local Amazon DNS resolver.

This was bad. However, a quick web search for “dns caching resolver” brought up dnsmasq. What we subsequently did was to install dnsmasq on all of our servers, using a dhclient script to prepend 127.0.0.1 as the first and primary resolver. This meant that all DNS queries would go to the local dnsmasq service.

The first order of business was to figure out a way to prepend 127.0.0.1 as the first resolver. On CentOS 7 at least, this was accomplished by creating /etc/dhclient.conf with the following contents:

    prepend domain-name-servers 127.0.0.1;

After this was created, systemctl restart NetworkManager.service correctly updated /etc/resolv.conf with our local resolver as the first resolver:

    # Generated by NetworkManager
    search nowhere
    nameserver 127.0.0.1
    nameserver 10.0.2.3
    options single-request-reopen

Note that 10.0.2.3 is the Amazon provided DNS resolver.
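If you are rolling this out to many machines, it helps to check that the prepend actually took effect everywhere. Here is a minimal sketch of such a check in Python. The function names and the `SAMPLE` text are mine, made up for illustration; the parsing covers only the `nameserver` lines shown above and is not a complete resolv.conf parser.

```python
def nameservers(resolv_conf_text):
    """Return the nameserver addresses in the order they appear."""
    servers = []
    for line in resolv_conf_text.splitlines():
        stripped = line.strip()
        # Skip blank lines and comment lines ('#' or ';').
        if not stripped or stripped[0] in "#;":
            continue
        fields = stripped.split()
        if len(fields) >= 2 and fields[0] == "nameserver":
            servers.append(fields[1])
    return servers


def local_resolver_is_first(resolv_conf_text):
    """True when 127.0.0.1 is the first (primary) resolver."""
    servers = nameservers(resolv_conf_text)
    return bool(servers) and servers[0] == "127.0.0.1"


# Sample input copied from the resolv.conf shown above.
SAMPLE = """\
# Generated by NetworkManager
search nowhere
nameserver 127.0.0.1
nameserver 10.0.2.3
options single-request-reopen
"""
```

On a real host you would pass in the contents of /etc/resolv.conf, for example `local_resolver_is_first(open("/etc/resolv.conf").read())`.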

All DNS entries have a time-to-live, or TTL, value specifying how long, in seconds, a record should be considered valid. This is the basic premise of DNS: things change, but to make things more efficient, cache them for as long as they are considered valid. Just like your CDN should be caching your assets for as long as it is reasonable to do so, DNS entries should be cached as long as they are allowed to live.
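To make the TTL idea concrete, here is a small sketch of a cache that honors TTLs. This is an illustration of the principle, not how dnsmasq is implemented: the `TTLCache` class, the `lookup` callable, and the injectable `clock` are all hypothetical names of my own.

```python
import time


class TTLCache:
    """Cache answers until their TTL (in seconds) runs out."""

    def __init__(self, lookup, clock=time.monotonic):
        self._lookup = lookup        # callable: name -> (address, ttl_seconds)
        self._clock = clock          # injectable so the sketch can be tested
        self._entries = {}           # name -> (address, expires_at)
        self.upstream_queries = 0    # how often we had to ask upstream

    def resolve(self, name):
        now = self._clock()
        entry = self._entries.get(name)
        if entry is not None and now < entry[1]:
            return entry[0]          # still valid: answer from the cache
        # Missing or expired: ask upstream and remember the new answer.
        self.upstream_queries += 1
        address, ttl = self._lookup(name)
        self._entries[name] = (address, now + ttl)
        return address
```

A record with a 60-second TTL is fetched once and then served from memory until the 60 seconds are up, no matter how many times it is requested in between. It neither skips caching nor caches forever, which are the two failure modes described next.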

Unfortunately, as in the haiku above, almost everything seems to get DNS wrong. It either doesn’t cache at all, or permanently caches records, leading to stale data.

As an aside, there is a bug in the ZooKeeper Java client libraries: they only resolve DNS names once. If you change the A record for a ZooKeeper node to point to a different host, the running JVM process will never be able to find it until you restart the process.

Therefore it’s either surprising or entirely not surprising that gethostbyname(3) makes no effort to cache values, making things very inefficient for programming languages like PHP (which the web application in question was written in), in that every HTTP request made by PHP to other services introduces whatever latency gethostbyname(3) involves.
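You can see this per-lookup cost for yourself with a few lines of Python. This is a rough sketch: `time_lookups` is a hypothetical helper of mine, and it assumes that Python’s `socket.getaddrinfo` goes through the system resolver and, like gethostbyname(3), does no caching of its own.

```python
import socket
import time


def time_lookups(hostname, count, resolve=socket.getaddrinfo):
    """Resolve `hostname` `count` times; return the seconds each lookup took."""
    timings = []
    for _ in range(count):
        start = time.perf_counter()
        resolve(hostname, 80)        # every call is a fresh lookup
        timings.append(time.perf_counter() - start)
    return timings
```

Running something like `time_lookups("google.com", 5)` on a host without a local cache should show every call paying a similar price, because nothing in between remembers the answer.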

So what do we do, rewrite PHP? Find every bad DNS implementation in every library in every language? Well, we could, but I don’t know about you, I’ve got better things to do with my time.

Enter dnsmasq: a caching DNS resolver with smart routing rules. Requesting google.com the first time will take tens to hundreds of milliseconds, but the next time, it’ll usually take less than a millisecond!

    $ dig google.com | grep -P 'Query\stime:'
    ;; Query time: 63 msec
    $ dig google.com | grep -P 'Query\stime:'
    ;; Query time: 0 msec

Sending and receiving UDP packets to the local interface is, unsurprisingly, pretty fast. If your application is making hundreds or thousands of DNS requests per second, dnsmasq will boil these down to, in effect, a single outbound request to a resolver, cache the answer, and only ask upstream again once the record’s TTL has expired.

Before we get to the final summary, here’s how we configured dnsmasq. This is /etc/dnsmasq.conf:

    # listen only at localhost for security
    listen-address=127.0.0.1
    # drop privileges to user 'nobody' after binding port 53
    user=nobody
    # route requests to all backends
    all-servers
    # ns1.dc1.mycompany.com
    server=/dc1.mycompany.com/10.100.0.3
    # ns2.dc1.mycompany.com
    server=/dc1.mycompany.com/10.100.0.4

And that’s it. dnsmasq will automatically discover the other resolver from /etc/resolv.conf, which is the Amazon provided DNS resolver. All requests for DNS names within the datacenter will be sent to the two nameserver instances in the datacenter (with all-servers set, dnsmasq queries both and uses whichever answers first); all other requests will be sent to the local Amazon resolver. In Amazon, the IP of your nameserver is somewhat deterministic as being the third host in your subnet; in Google Cloud, this value is always 169.254.169.254. Either way, it’s not necessary to hardcode it into the dnsmasq configuration: if it’s in /etc/resolv.conf, dnsmasq will detect it automatically.
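The routing decision itself is simple enough to sketch in a few lines of Python. This is an illustration of the idea behind the server=/zone/ip lines, not dnsmasq’s actual code: `pick_upstreams` and its arguments are hypothetical names, and it assumes the most specific matching zone wins.

```python
def pick_upstreams(name, zone_servers, default_servers):
    """Return the upstream resolvers to ask for `name`.

    zone_servers maps a zone such as "dc1.mycompany.com" to a list of
    resolver IPs, mirroring server=/zone/ip lines. The most specific
    (longest) matching zone wins; everything else goes to default_servers.
    """
    labels = name.lower().rstrip(".").split(".")
    # Try "a.b.c", then "b.c", then "c": longest suffix first.
    for i in range(len(labels)):
        zone = ".".join(labels[i:])
        if zone in zone_servers:
            return zone_servers[zone]
    return default_servers
```

With the configuration above, a name like mysql.dc1.mycompany.com would go to 10.100.0.3 and 10.100.0.4, while google.com would fall through to whatever resolver was found in /etc/resolv.conf.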

Not only did this fix our issue, but it made everything across all of our servers that involved DNS faster.

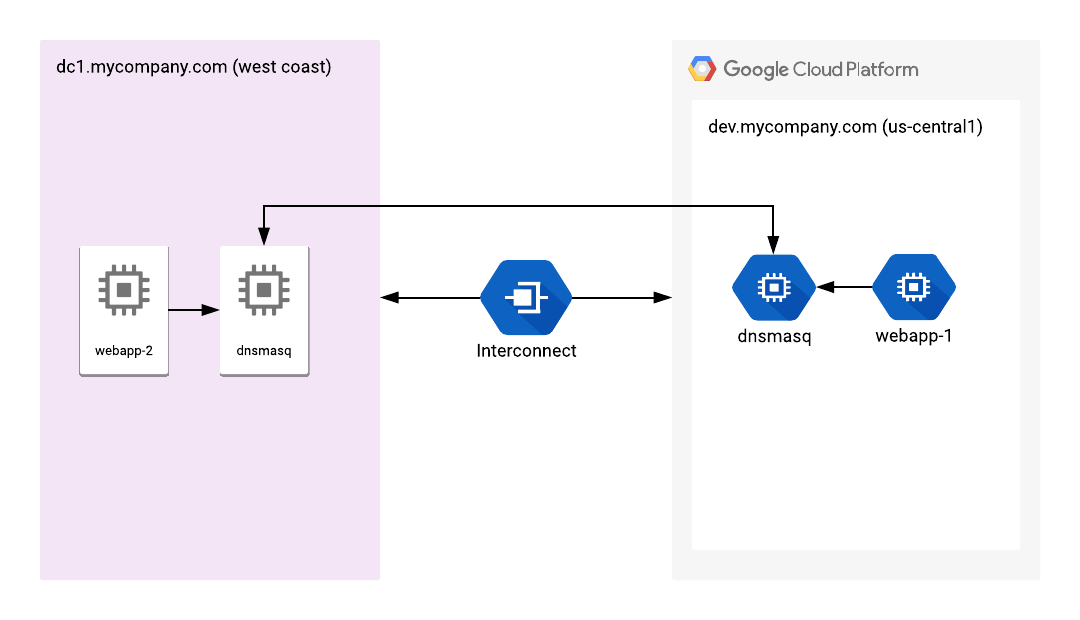

Finally, let’s consider the following example:

In our previous example, we only needed unidirectional DNS; our instances in AWS needed to be able to resolve names in our datacenter, but the datacenter didn’t need to resolve names in AWS. In this new example (also something that I helped build), we consider a setup for bidirectional DNS resolution between the datacenter and GCP.

This is a two-tier caching setup for bidirectional name resolution. A DNS query from a process goes to the local dnsmasq on the local machine. It is then intelligently routed based on the zone name to the local “bridge” dnsmasq instance within the network, which then routes the request over the interconnect to a dnsmasq resolver on the other side.

This does exceed the bare minimum of what would be required for bidirectional resolution, but not by much. The bare minimum would consist of two DNS servers, one on each side of the connection between networks. We simply enhance this bare minimum by adding caching as a feature in multiple stages.

I’ve seen extremely large deployments (tens of thousands of servers), and at that scale, you’d be surprised at how the oft-forgotten low-level network primitives like DNS can flare up to cause colossal problems. The architecture diagrammed above uses multiple caching layers to dramatically step down the amount of load placed on DNS resolvers in addition to making most queries much faster.

5,000 servers request a DNS record at localhost, then at the dnsmasq instance within the local network, then at the dnsmasq instance within the remote network, if that’s where the request is heading. At each stage, we step down the number of requests, and resolved names are only re-requested when they expire. 5,000 DNS requests to a single dnsmasq instance will result in a single DNS query from that dnsmasq to its configured resolver for that zone. These instances will then all cache DNS locally as well, dramatically reducing the pressure on resolvers.
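The step-down effect is easy to demonstrate with a toy simulation. This sketch makes some simplifying assumptions: every lookup is for the same name, everything happens within a single TTL period (expiry is not modeled), and the `CachingTier` class, the `simulate` function, and the placeholder address are all made up for illustration.

```python
class CachingTier:
    """A resolver that answers from cache, or asks the next tier once."""

    def __init__(self, upstream):
        self.upstream = upstream     # next tier, or None for the last hop
        self.cache = {}
        self.queries_received = 0

    def resolve(self, name):
        self.queries_received += 1
        if name not in self.cache:
            if self.upstream is None:
                self.cache[name] = "192.0.2.10"   # placeholder answer
            else:
                self.cache[name] = self.upstream.resolve(name)
        return self.cache[name]


def simulate(hosts, lookups_per_host, name="mysql.dc1.mycompany.com"):
    """Return queries seen at (all local dnsmasqs, the bridge, the remote)."""
    remote = CachingTier(upstream=None)       # dnsmasq on the other side
    bridge = CachingTier(upstream=remote)     # "bridge" dnsmasq in our network
    local_total = 0
    for _ in range(hosts):
        local = CachingTier(upstream=bridge)  # dnsmasq on each server
        for _ in range(lookups_per_host):
            local.resolve(name)
        local_total += local.queries_received
    return local_total, bridge.queries_received, remote.queries_received
```

Calling `simulate(5000, 100)` models 5,000 servers making 100 lookups each: the local dnsmasq instances absorb 500,000 queries between them, the bridge sees 5,000 (one per server), and the remote side sees just one.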

So that’s it. There is a lot more that dnsmasq can do, but we’ve seen how simple it is to deploy and how it can reduce request latency for everything that uses DNS, which is almost everything on your system.